The start_index parameter controls pagination and can be easily calculated.
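As a minimal sketch of that calculation, assuming the endpoint returns a fixed number of reviews per request and that start_index is a zero-based offset: the page size of 10 and the helper names `start_index_for` and `start_indexes` are illustrative assumptions, not values confirmed by the API.

```python
# Assumption: each request returns a fixed number of reviews.
REVIEWS_PER_PAGE = 10  # hypothetical page size


def start_index_for(page: int, page_size: int = REVIEWS_PER_PAGE) -> int:
    """Return the offset of the first review on a zero-based page number."""
    if page < 0 or page_size <= 0:
        raise ValueError("page must be >= 0 and page_size must be > 0")
    return page * page_size


def start_indexes(total_reviews: int, page_size: int = REVIEWS_PER_PAGE) -> list[int]:
    """Return every start_index needed to cover total_reviews reviews."""
    return list(range(0, total_reviews, page_size))


if __name__ == "__main__":
    print(start_index_for(3))
    print(start_indexes(25))
```

If the real page size turns out to be different, only `REVIEWS_PER_PAGE` needs to change.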


The most important of these three is the feature_id, which I’ll show you how to get in order to create the GET request.


To install virtualenv: $ pip install virtualenv

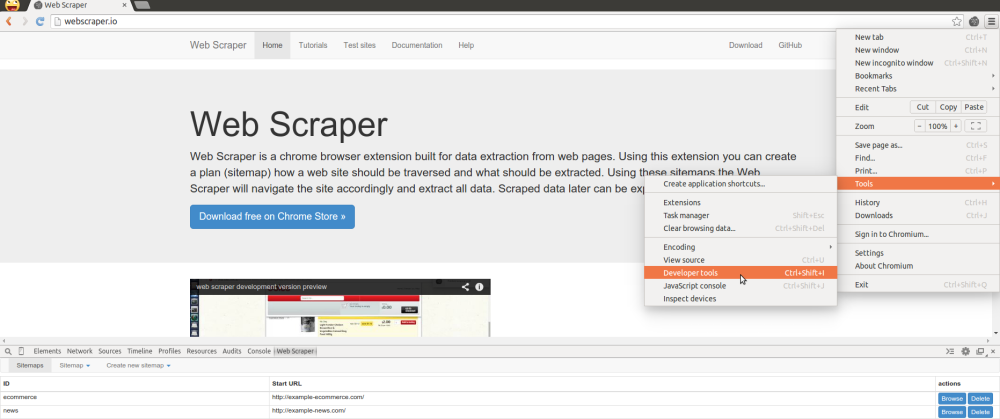

Web scraping has been a very hot topic for quite some time now, so let’s talk about what it actually is. Web scraping is the process of extracting text from webpages in an automated way.

Have you ever wondered how Google fetches data and indexes it into its search engine? Well, they do web scraping and crawling.

There are a number of use cases where web scraping is required, such as gathering datasets to train machine learning models, gathering prices of products, gathering emails for lead generation and much more.

What is the difference between web crawling and web scraping?

A lot of people confuse web crawling with web scraping. As I mentioned above, web scraping is the process of extracting text from webpages in an automated way, while web crawling is the process of following the links you choose. The two go hand in hand when you want to scrape X number of pages. For example, if you want to scrape paginated search results off of Amazon, you’ll need to follow the pagination links to reach every page.

Scrapy is a web scraping framework built in Python that follows the batteries-included approach, the same as Django. It’s an asynchronous framework that comes with a lot of functionality right from the get-go, as soon as you start the project.

1. The Spider sends the initial request to the Engine to start crawling.
2. The Engine schedules the request in the Scheduler and asks for the next request to crawl.
3. The Scheduler returns the next request to the Engine.
4. The Engine sends the request off to the Downloader, passing it through the Downloader Middleware.
5. Once the request finishes downloading, the Downloader generates a response and sends it back to the Engine through the Downloader Middleware.
6. After getting the response from the Downloader, the Engine passes it to the Spider through the Spider Middleware.
7. The Spider processes the response and returns the scraped items and any new requests to the Engine, again passing through the Spider Middleware.
8. The Engine sends the scraped items to the Item Pipeline, then sends the processed requests to the Scheduler and asks for the next request to crawl.

This process (steps 1 to 8) repeats until no requests are left in the Scheduler. I like to think of the Engine as the spine and the Middleware as the limbs of the Scrapy architecture.

Today I’ll show you how to scrape business reviews off of Google. For this you’ll need:

- virtualenv (not necessary, but we will use it here).
- Postman (we’ll be scraping directly from Google’s backend by intercepting the API that it hits).

Note: I won’t be going through how XPath and CSS selectors actually work, so you need to have a basic understanding of them.

Make sure that you have python3, pip and virtualenv installed on your machine.
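The eight-step loop between the Spider, Engine, Scheduler and Downloader can be illustrated with a toy simulation. This is only a sketch of the control flow in plain Python, not Scrapy's actual implementation: it leaves out the middleware and asynchronous downloading, and `FAKE_SITE`, `downloader`, `spider_parse` and `run_engine` are all hypothetical names invented for the illustration.

```python
from collections import deque

# Toy stand-in for the web: URL -> (items on the page, links to follow).
FAKE_SITE = {
    "page-1": (["review A", "review B"], ["page-2"]),
    "page-2": (["review C"], []),
}


def downloader(url):
    """Steps 4-5: 'download' a request and produce a response."""
    return {"url": url, "body": FAKE_SITE[url]}


def spider_parse(response):
    """Step 7: yield scraped items and any follow-up requests."""
    items, links = response["body"]
    for item in items:
        yield ("item", item)
    for link in links:
        yield ("request", link)


def run_engine(start_url):
    """Drive the loop until the scheduler has no requests left."""
    scheduler = deque([start_url])  # steps 1-2: the initial request is scheduled
    seen = {start_url}              # avoid scheduling the same URL twice
    pipeline = []                   # stands in for the Item Pipeline
    while scheduler:
        request = scheduler.popleft()    # step 3: next request to crawl
        response = downloader(request)   # steps 4-6: download, hand to spider
        for kind, value in spider_parse(response):
            if kind == "item":
                pipeline.append(value)   # step 8: items go to the pipeline
            elif value not in seen:
                seen.add(value)
                scheduler.append(value)  # step 8: new requests are scheduled
    return pipeline


if __name__ == "__main__":
    print(run_engine("page-1"))  # prints ['review A', 'review B', 'review C']
```

In a real Scrapy project you only write the part that corresponds to `spider_parse`; the framework supplies the engine, scheduler and downloader.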
